BLOG: Reducing Latency in the Online Video World

In today’s on-demand-driven world, latency can be a big issue, especially in online gaming, live sports and virtual reality.

Kevin Staunton-Lambert, Solutions Architect at Switch Media, shares how to reduce latency in the modern online video world.

Whether or not you’re a science enthusiast, you’re probably aware that we recently celebrated the 50th anniversary of the historic Apollo 11 moon landing. On July 16, 1969, at 9:32am EDT, millions of viewers crowded around their televisions to watch three astronauts lift off from NASA’s Kennedy Space Center in Florida to begin their incredible journey to the moon. It was a feat in more ways than one: in engineering terms and in broadcast terms.

When the Eagle landed, television was broadcast via analogue signals. There was no streaming, and the average latency of traditional TV (the kind many of us grew up with) was a mere three seconds. This meant that every viewer watching the moon landing saw events unfold at almost exactly the same time. Since that historic day, only ten more people have set foot on the moon, but television has changed dramatically. With the rise of over-the-top (OTT) television in the past decade, the average latency can now reach 42 seconds (yes, you read that correctly), especially on mobile apps.

For today’s consumers, that amount of lag is unacceptable, and at an enterprise level even milliseconds can make a world of difference (think live sports betting!). It’s interesting that, for all the ways video has evolved since the moon landing, latency problems have actually increased.

When we talk about latency, we’re actually talking about two kinds: real and perceived. Let’s use a live sports match as an example. Real latency is the delay you would notice if you were in the stadium and also watching the game on your mobile device; it includes the latency generated in converting vision and audio from analogue into digital form.

Perceived latency, by contrast, is about whether viewers receive the content at the same time as one another, as a shared experience; it doesn’t include the latency around encoding. Some delivery routes are faster than others, with satellite networks generally being faster than OTT.
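
To make the distinction concrete, here is a minimal Python sketch that models glass-to-glass latency as a sum of pipeline stages. Real latency counts every stage; perceived latency, following the definition above, leaves out capture and encoding, which every viewer shares, so it is the part that separates a satellite viewer from an OTT viewer. The stage names and millisecond figures are hypothetical, chosen only to show the arithmetic; they are not measurements.

```python
from dataclasses import dataclass


@dataclass
class Stage:
    name: str
    ms: int         # delay this stage adds, in milliseconds
    encoding: bool  # True for capture/encoding work that every route shares


def real_latency(stages: list[Stage]) -> int:
    """Glass-to-glass delay: every stage, including capture and encoding."""
    return sum(s.ms for s in stages)


def perceived_latency(stages: list[Stage]) -> int:
    """Delay that differs between viewers: everything except capture/encoding."""
    return sum(s.ms for s in stages if not s.encoding)


# Hypothetical figures, chosen only to illustrate the arithmetic.
shared = [
    Stage("capture and analogue-to-digital conversion", 100, True),
    Stage("encoding", 1_000, True),
]
satellite = shared + [Stage("satellite delivery", 500, False)]
ott = shared + [Stage("packaging, CDN and player buffer", 20_000, False)]

for route, stages in [("satellite", satellite), ("OTT", ott)]:
    print(f"{route}: real {real_latency(stages)} ms, "
          f"perceived {perceived_latency(stages)} ms")

# How far behind the satellite viewer the OTT viewer sees the same penalty kick.
gap_ms = perceived_latency(ott) - perceived_latency(satellite)
print(f"The OTT viewer is {gap_ms / 1000:.1f} s behind")
```

Because the shared stages cancel out when two routes are compared, the gap between viewers depends only on delivery, which is why the choice of route matters so much for a shared live experience.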

How can we reduce live latency?

Since OTT live services began, latency has come down, but only slightly. The biggest challenge for content providers is to make the OTT experience they provide match the linear TV broadcast experience as closely as possible.

When it comes to real-time applications such as virtual reality, online gaming and live sports, latency is a big issue. During sports matches, fans often post live updates on social media, so anyone watching a delayed stream risks having the action spoiled before they see it. Being able to see the action at the same time as everyone else matters to viewers because it means they don’t have to be at the game to get a real-time feel for it. If someone kicks a penalty and you don’t see it on your sports app until 10 or 15 seconds after it happens, you’re likely to be an unhappy fan. In online sports betting, latency is more critical than in most other applications: if you’re 15 or 20 seconds behind, you can easily miss a bet. Every second of delay carries a cost, however intangible, and can seriously devalue interactive and live betting as well as online auctions.

So, how can we combat this? One way is to throw money at the problem: buy better cameras, buy faster hardware and pay for more bandwidth. But we all know this isn’t always possible. Thankfully, there are technologies on the market today that help with latency, and the AV1 codec is an obvious one. Only three years old, AV1 was designed to handle higher-resolution video better and can deliver high-quality video at bitrates about 50 percent lower than H.264.
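
As a rough illustration of what a 50 percent bitrate saving means in practice, this Python sketch estimates how long one segment takes to download at an H.264 bitrate and at half that bitrate for AV1. The 6 Mbps bitrate, 8 Mbps connection and six-second segment are hypothetical, and the model deliberately ignores round trips and protocol overhead.

```python
def segment_megabits(bitrate_mbps: float, segment_s: float) -> float:
    """Size of one segment in megabits: bitrate multiplied by duration."""
    return bitrate_mbps * segment_s


def download_seconds(bitrate_mbps: float, segment_s: float, link_mbps: float) -> float:
    """Time to fetch one segment over a link, ignoring RTT and protocol overhead."""
    return segment_megabits(bitrate_mbps, segment_s) / link_mbps


SEGMENT_S = 6.0              # hypothetical segment length
LINK_MBPS = 8.0              # hypothetical viewer bandwidth
H264_MBPS = 6.0              # hypothetical H.264 bitrate for a given quality
AV1_MBPS = H264_MBPS * 0.5   # "about 50 percent lower" at similar quality

h264 = download_seconds(H264_MBPS, SEGMENT_S, LINK_MBPS)
av1 = download_seconds(AV1_MBPS, SEGMENT_S, LINK_MBPS)
print(f"H.264 segment: {h264:.2f} s to download")
print(f"AV1 segment:   {av1:.2f} s to download")
```

Under these assumptions the AV1 segment arrives in half the time on the same connection, which leaves more headroom and can make it easier to run a shorter player buffer.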

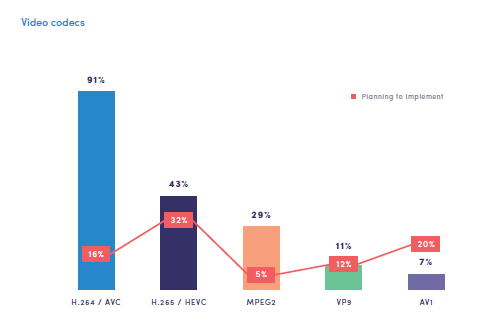

Bitmovin’s recent Video Developer Report 2019 shows that H.264 is still the most widely used video codec in 2019, with 91 percent of respondents confirming that it’s their current codec of choice. But the report also highlights that one in five developers plan to implement AV1 in 2020, and that over half of the survey participants have realistic and achievable latency expectations of less than five seconds. Though H.264 is the current front-runner, I believe AV1 will do well in this market because many hardware vendors have started to embrace it. Take Broadcom, for example, which just a couple of weeks ago introduced a new chip that is likely to be a game-changer. It supports AV1 and integrated Wi-Fi 6, and it’s designed for satellite, cable and IPTV set-top boxes.

Further to this is Apple’s HLS (HTTP Live Streaming), a streaming protocol implemented as part of its iOS software. In the early days of HLS, segments were 10 seconds long, and because players typically stay three segments behind the live edge before starting playback, the best achievable latency was 30 seconds on top of the real-world latency mentioned earlier. This meant that 45 seconds to a minute was quite normal. Apple later reduced its recommended segment length to six seconds, but that’s only marginally better. In June this year, Apple announced low-latency HLS at its Worldwide Developers Conference. It works by adding partial media segments: because each part is short, it can be packaged, published and added to the media playlist as soon as it’s ready, so the unit a player has to wait for shrinks from a six-second segment to a part of around 200ms. Check out this example of our low-latency stream using Apple’s HLS (to view this three-second low-latency example, you’ll need iOS/iPadOS/tvOS 13 or macOS Catalina). For scalable global distribution of a live feed, this example shows Apple’s low-latency HLS over a real CDN.
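
The arithmetic behind these numbers can be sketched in a few lines of Python. This is a minimal sketch, assuming the player stays three media units behind the live edge, roughly in line with the HLS specification’s guidance for full segments and with the suggested minimum for the low-latency PART-HOLD-BACK attribute. The playlist builder, its parameters and the segN.partM.mp4 file names are hypothetical; the tag names (EXT-X-PART, EXT-X-PART-INF, EXT-X-SERVER-CONTROL) follow Apple’s published low-latency HLS specification, which has been revised since the WWDC announcement.

```python
# Live-edge arithmetic for HLS. Assumption: the player stays three media
# units (full segments, or partial segments in low-latency HLS) behind the
# newest content in the playlist before it starts playing.
HOLD_BACK_UNITS = 3


def hold_back_seconds(unit_s: float, units: int = HOLD_BACK_UNITS) -> float:
    """Playlist-imposed delay only; encoding and CDN time come on top."""
    return unit_s * units


def ll_hls_playlist(first_seq: int, segment_s: float, part_s: float,
                    complete_segments: int, parts_in_progress: int) -> str:
    """Build a minimal low-latency HLS media playlist (hypothetical file names)."""
    lines = [
        "#EXTM3U",
        f"#EXT-X-TARGETDURATION:{round(segment_s)}",
        "#EXT-X-SERVER-CONTROL:CAN-BLOCK-RELOAD=YES,"
        f"PART-HOLD-BACK={hold_back_seconds(part_s):.1f}",
        f"#EXT-X-PART-INF:PART-TARGET={part_s}",
        f"#EXT-X-MEDIA-SEQUENCE:{first_seq}",
    ]
    # Finished segments are listed as usual. (A real packager would also keep
    # the parts of the most recent finished segments; omitted for brevity.)
    for seq in range(first_seq, first_seq + complete_segments):
        lines.append(f"#EXTINF:{segment_s:.1f},")
        lines.append(f"seg{seq}.mp4")
    # The segment still being encoded is published part by part, so a player
    # can fetch each short part without waiting for the whole segment.
    live_seq = first_seq + complete_segments
    for i in range(parts_in_progress):
        lines.append(f'#EXT-X-PART:DURATION={part_s},URI="seg{live_seq}.part{i}.mp4"')
    return "\n".join(lines)


for label, unit_s in [("HLS, 10 s segments", 10.0),
                      ("HLS, 6 s segments", 6.0),
                      ("LL-HLS, 200 ms parts", 0.2)]:
    print(f"{label}: at least {hold_back_seconds(unit_s):.1f} s behind live")

print(ll_hls_playlist(first_seq=100, segment_s=6.0, part_s=0.2,
                      complete_segments=2, parts_in_progress=3))
```

The hold-back scales with the unit the player waits for, so shrinking that unit from seconds to fractions of a second is what moves the needle. Encoding, packaging and CDN time still come on top, which is why a real low-latency HLS stream lands nearer the three seconds mentioned above than the sub-second playlist figure.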

Others have made significant progress too. We’d be remiss if we didn’t mention Red Bee Media’s success this year in achieving a record low latency of 3.5 seconds, glass to glass, on its live OTT feed using CMAF. The company demonstrated this milestone at NAB earlier this year.

Still, there is an overall lack of agreement in the market about which codecs offer the best way forward. One thing is for sure: it will be interesting to see how this all plays out!

NASA is planning the next moon landing for 2024. I wonder if we’ll all be watching it at the same time.